In recent years, analog photography has experienced a revival: film stocks are sold out, closed factories have reopened, and the demand for analog film has doubled in the last five years. At the same time, Fujifilm, whose digital cameras are known for their built-in film emulation, reported record earnings and a 38.9% increase in operating income in 2024. There is clearly a demand to recreate the so-called “film look” of pictures taken on analog film. This look stems from the differences between analog and digital images in resolution and detail, dynamic range, noise and color rendition. Current solutions such as Fujifilm's film emulation appear to rely on fixed profiles, which may work in certain scenarios but do not adapt to the image. In this work, we attempt to transform digital images into the look of various analog film stocks, using generative artificial intelligence (AI) to perform this style translation based on the content of the input image. We focus on color rendition, with the goal of transforming images without any loss of resolution or image quality so that our work is applicable in professional photography.
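The limitation of a fixed profile can be made concrete. The following is a minimal sketch, assuming such a profile is stored as a 3D lookup table (LUT), a common format in color grading. Fujifilm does not document its internal implementation, so this is an illustration only, and `identity_lut`, `apply_lut` and the "warm" profile are hypothetical. Each pixel is mapped by its RGB value alone. The same input color therefore always produces the same output color, whatever the rest of the image contains, and because the mapping is applied per pixel, resolution is left untouched.

```python
import numpy as np

# Assumption: a fixed film profile stored as a 3D LUT (illustration only,
# not Fujifilm's documented implementation).


def identity_lut(size: int) -> np.ndarray:
    """Identity LUT of shape (size, size, size, 3), indexed as lut[r, g, b]."""
    grid = np.linspace(0.0, 1.0, size)
    r, g, b = np.meshgrid(grid, grid, grid, indexing="ij")
    return np.stack([r, g, b], axis=-1)


def apply_lut(image: np.ndarray, lut: np.ndarray) -> np.ndarray:
    """Map each pixel of an RGB image in [0, 1] through a 3D LUT (trilinear interpolation)."""
    size = lut.shape[0]
    coords = np.clip(image, 0.0, 1.0) * (size - 1)            # continuous LUT coordinates
    lo = np.minimum(np.floor(coords).astype(int), size - 2)   # lower corner of the grid cell
    frac = coords - lo                                         # position inside the cell
    out = np.zeros(image.shape, dtype=float)
    # Blend the 8 surrounding LUT entries, weighted by distance along each axis.
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                offset = np.array([dr, dg, db])
                idx = lo + offset
                weights = np.where(offset == 1, frac, 1.0 - frac).prod(axis=-1)
                out += weights[..., None] * lut[idx[..., 0], idx[..., 1], idx[..., 2]]
    return out


# Hypothetical "warm" profile: lift red slightly and pull blue down.
warm = identity_lut(17)
warm[..., 0] = warm[..., 0] ** 0.9
warm[..., 2] = warm[..., 2] * 0.9

# Two different photos that share one pixel color.
rng = np.random.default_rng(0)
photo_a = rng.random((4, 4, 3))
photo_b = rng.random((4, 4, 3))
photo_b[0, 0] = photo_a[0, 0]

out_a = apply_lut(photo_a, warm)
out_b = apply_lut(photo_b, warm)
print(np.allclose(out_a[0, 0], out_b[0, 0]))  # True: the profile ignores image content
```

An image-adaptive method, by contrast, could render that shared color differently depending on the rest of the scene.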

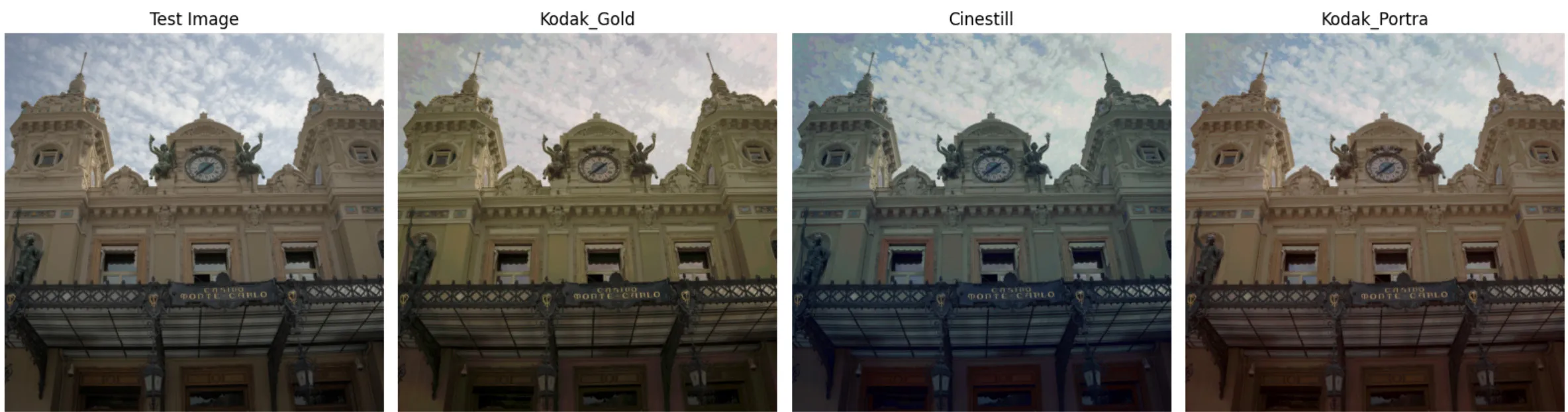

The final model is based on a StarGAN architecture. The following image shows the translation of a test image to the three film domains the model was trained on:

Further examples and the related Jupyter notebooks are available in the GitHub repository: https://github.com/ns144/3D-LUT

The paper is available here: Download
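StarGAN serves several target domains with a single generator by passing the target domain in as an extra input. In the original StarGAN formulation, the one-hot domain label is replicated over the spatial dimensions and concatenated to the image channels. The sketch below shows only this conditioning step in NumPy. The three domain names are hypothetical placeholders, since the film stocks are not named here, and the generator network and training losses are omitted.

```python
import numpy as np

# Hypothetical placeholder names for the three film domains.
FILM_DOMAINS = ["film_a", "film_b", "film_c"]


def domain_label(name: str) -> np.ndarray:
    """One-hot target-domain vector of length len(FILM_DOMAINS)."""
    label = np.zeros(len(FILM_DOMAINS), dtype=np.float32)
    label[FILM_DOMAINS.index(name)] = 1.0
    return label


def condition_on_domain(images: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Tile labels (N, D) over H x W and append them to images (N, C, H, W).

    Returns an array of shape (N, C + D, H, W), the generator's input.
    """
    n, _, h, w = images.shape
    label_maps = np.broadcast_to(labels[:, :, None, None], (n, labels.shape[1], h, w))
    return np.concatenate([images, label_maps], axis=1)


batch = np.random.default_rng(0).random((2, 3, 8, 8), dtype=np.float32)
labels = np.stack([domain_label("film_a"), domain_label("film_c")])
generator_input = condition_on_domain(batch, labels)
print(generator_input.shape)  # (2, 6, 8, 8)
```

Feeding the same image with a different label asks the generator for a different film look, which is how one trained model can translate a single test image into every domain it was trained on.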